Interdisciplinary Initiatives Program Round 7 - 2014

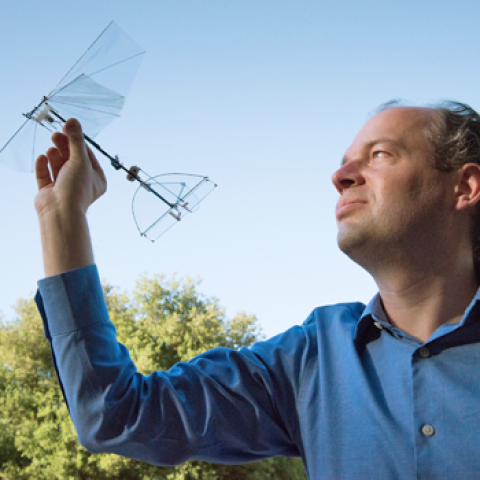

David Lentink, Mechanical Engineering

Eric Knudsen, Neurobiology

A major role of vision is to enable rapid navigation through complex environments. This function requires precise coordination of head and eye movements to stabilize the visual scene during perturbations caused by rough or moving terrain and by the animal's own locomotor actions. To this end, the brain integrates input from the eyes and from the gravity and acceleration sensors in the inner ear. This complex information-processing task is supported by a variety of poorly understood physiological adaptations. Because of technical limitations, vision research to date has been conducted mainly on restrained animals, in which the brain is less active and motion information from the inner ears is absent. Nevertheless, recent studies have revealed that visual information processing is dramatically enhanced during active locomotion.

We propose to record neural activity in the brain centers responsible for sensorimotor control in unrestrained animals, while simultaneously tracking the position and orientation of the head and eyes. We will make these measurements in birds flying freely in an advanced avian wind tunnel, in which the visual scene and air turbulence are under experimental control. The laboratory of Eric Knudsen will measure how visual information in the brain changes during sensorimotor challenges. Complementary experiments in David Lentink's lab will assess how the dynamics of visual and inner-ear inputs are used to control flight. Together, our laboratories will develop equipment that enables wireless measurements of brain activity and of eye and head motion in freely moving animals. Although these methods will first be applied to birds, the technology will be made available as open hardware for sensorimotor research on other unrestrained vertebrates. Our findings will transform our understanding of how the brain controls locomotion, and specifically of how it responds adaptively to motion signals during unexpected perturbations. In addition, the results will yield much-needed control strategies for search-and-rescue robots flying in turbulent wind.