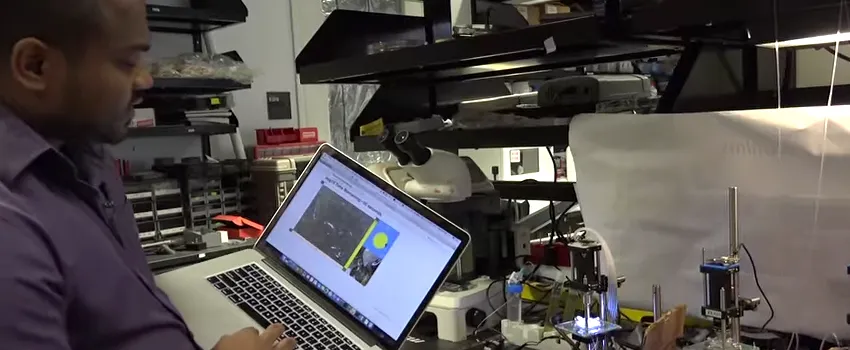

Screenshot from video by Marc Franklin: The Interactive Biology Cloud Lab could one day empower millions of students to learn in new and more imaginative ways.

Stanford News - December 7th, 2016 - by Andrew Myers

For as long as biology has been a cornerstone of scientific education, laboratory instruction for students has begun with an introduction to the wonders of one-celled creatures under a microscope. The students learn the form and function of the various cell parts – the flagella, the organelles, the membranes, etc. – and dutifully catalog what they see. They observe passively, never interacting with the cells.

Now, researchers at Stanford University have brought together bioengineers and educators to develop an Internet-enabled biological laboratory that allows students to truly interact with living cells in real time, potentially reshaping how students learn about biology.

This interactive Biology Cloud Lab, as the researchers have dubbed their prototype, could one day empower millions of students to learn in new and more imaginative ways. The team, led by Ingmar Riedel-Kruse, an assistant professor of bioengineering, and Paulo Blikstein, assistant professor of education, published its work in Nature Biotechnology.

“What has been missing from early biological lab experiences has been that last bit of basic science – the ability to truly interact and experiment with living cells so that students experience first-hand how cells behave in reaction to external stimuli,” Riedel-Kruse said.

“Labs in most schools are stuck in the 19th century, with cookbook-style experiments,” Blikstein said, adding, “Biology Cloud Labs could democratize real scientific investigation and change how kids learn science.”

How it works

The Biology Cloud Lab gives students and teachers remote control software to operate what the researchers call biotic processing units (BPUs). Each BPU includes a microfluidic chip containing communities of microorganisms. Around each chip, four user-controlled LEDs allow students to apply different types of light stimuli. A webcam microscope livestreams the chip’s content.

The authors used a common single-celled organism called Euglena. Euglena seek out light to turn it into energy, but they are also repelled when the light grows too intense. It is this behavioral contrast that forces students to hypothesize why Euglena behave as they do, providing a deeper understanding of the scientific process that is the very point of early lab work.

Students can control the lab from any internet-enabled computer, tablet or smartphone. Today they can issue commands to shine the LEDs this way or that and observe how the Euglena behave. The researchers plan to add other microorganisms and stimuli; the concept of remotely controlled, interactive learning would also apply to physics, chemistry and other fields.

Even with this prototype the researchers are thinking big. They believe that 250 of their biotic processing units, installed in a single 100-square-meter room and networked with a one-gigabit-per-second Internet connection, could serve a million students each year. At that scale, each experiment would cost just one cent.
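The scale figures above can be sanity-checked with a few lines of arithmetic. The sketch below uses only the numbers quoted in the article (250 units, a million students a year, one cent per experiment). The number of experiments each student runs per year is not stated in the article, so `EXPERIMENTS_PER_STUDENT` is a purely illustrative assumption, and the function names are hypothetical.

```python
# Back-of-the-envelope check of the scale figures quoted in the article.
STUDENTS_PER_YEAR = 1_000_000      # from the article
BPU_COUNT = 250                    # from the article
COST_PER_EXPERIMENT_CENTS = 1      # from the article

# ASSUMPTION (not in the article): experiments each student runs per year.
EXPERIMENTS_PER_STUDENT = 10


def students_per_bpu(students: int, bpus: int) -> float:
    """Average number of students each unit would serve in a year."""
    return students / bpus


def experiments_per_bpu_per_day(
    students: int, bpus: int, experiments_per_student: int, days: int = 365
) -> float:
    """Average daily experiment load on one unit, spread evenly over the year."""
    return students * experiments_per_student / bpus / days


def annual_cost_usd(
    students: int, experiments_per_student: int, cost_cents: int
) -> float:
    """Total yearly cost in dollars at a fixed per-experiment price in cents."""
    return students * experiments_per_student * cost_cents / 100


if __name__ == "__main__":
    print(students_per_bpu(STUDENTS_PER_YEAR, BPU_COUNT))
    print(experiments_per_bpu_per_day(
        STUDENTS_PER_YEAR, BPU_COUNT, EXPERIMENTS_PER_STUDENT))
    print(annual_cost_usd(
        STUDENTS_PER_YEAR, EXPERIMENTS_PER_STUDENT, COST_PER_EXPERIMENT_CENTS))
```

With the article's numbers, each unit would serve 4,000 students a year on average; the per-day load and the total cost depend entirely on the assumed number of experiments per student.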

“We have optimized the technology to keep the cells stable and responsive over weeks. This makes the Biology Cloud Lab low-cost and scalable for basic education,” Riedel-Kruse said. “And we also implemented interfaces for experiment automation, data analysis and modeling to enable deep inquiry.”

Science at scale

Several states are starting to follow the Next Generation Science Standards, the new guidelines for K-12 science education in the United States. Many other countries are reforming their science standards due to the increasing need for scientific literacy in the 21st century.

“Many of these international standards have proven hard to implement in real-world classrooms due to logistical and cost factors, especially for life sciences,” Blikstein said. “The Biology Cloud Lab will put many of the most sophisticated NGSS practices within reach of students for the first time.” The researchers explained that the system includes software that makes it easy for students to analyze and visualize the data, test hypotheses and program the system to run hundreds of experiments automatically.

The team tested its prototype, located on the Stanford campus, with a group of ten self-paced university students working from their homes in a college-level biophysics class. A second test with middle-school cohorts involved live, in-classroom experiments. These were projected on a wall where one student controlled the light stimuli through a joystick as the class discussed results and suggested various experimental variations.

“The technical challenges were considerable,” said Zahid Hossain, the paper’s first author and a doctoral candidate in computer science, who designed the hardware and cloud architecture. “To make the biotic processing units work we had to develop new algorithms so that many, many users can run experiments over an extended time.”

Learning outcomes

Engin Bumbacher, a graduate student at the School of Education and a co-author on the paper, remarked on the learning outcomes.

“The students visibly enjoyed the interactive experiments, noting behaviors and trying many light sequences,” Bumbacher said. “They engaged in rich discussions, analysis and modeling, all in a single online lesson.”

In a third trial the Stanford researchers collaborated with Professor Kemi Jona at Northeastern University to integrate the Biology Cloud Lab into an educational content management system that allows teachers to personalize their lesson plans. Then students from a Chicago middle school successfully ran their experiments.

“We are doing to biology what Seymour Papert did to computer programming in the 1970s with the Logo language,” Blikstein said. “The Biology Cloud Lab makes previously impossible activities easy and accessible to kids – and maybe also to professional scientists in the future.”

Riedel-Kruse and Blikstein are both members of Stanford Bio-X.

This research was supported by two National Science Foundation Cyberlearning grants, two Stanford Graduate Fellowships, and the Lemann Center at Stanford University.