Stanford Report - November 9th, 2009 - by Ingfei Chen

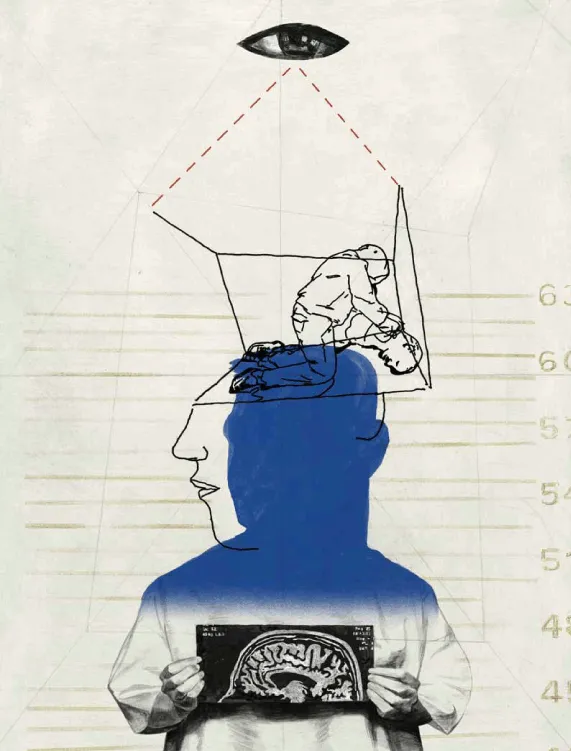

Will advances in neuroscience make the justice system more accurate and unbiased? Or could brain-based testing wrongly condemn some and trample the civil liberties of others? The new field of neurolaw is cross-examining for answers.

In August 2008, Hank Greely received an e-mail from an International Herald Tribune correspondent in Mumbai seeking a bioethicist's perspective on an unusual murder case in India: A woman had been convicted of killing her ex-fiancé with arsenic, and the circumstantial evidence against her included a brain-scan test that purportedly showed she had a memory, or "experiential knowledge," of committing the crime. "I was amazed and somewhat appalled," recalls Greely (BA '74), the Deane F. and Kate Edelman Johnson Professor of Law, who has studied the legal, ethical, and social implications of biomedical advances for nearly 20 years. The Brain Electrical Oscillations Signature profiling test monitors brain waves through electrodes placed on the scalp, and its supposed memory-parsing powers as a type of lie detector looked implausible, he says.

No studies of BEOS, as it's called, have been published in peer-reviewed scientific journals to prove it works.

Maybe society will someday find a technological solution to lie detection, Greely told the Tribune, "but we need to demand the highest standards of proof before we ruin people's lives based on its application."

While it remains unclear whether the guilty verdict in the Indian case will be upheld, the idea that a court would base a murder conviction even partly on a new, unproven technology makes Greely uneasy. The case is the sort of potentially disastrous scenario that he and colleagues in the budding field of "neurolaw" seek to head off in the U.S. legal system.

In the United States, concerns about a similar brain-scanning lie detection technology, based on functional magnetic resonance imaging (fMRI), have been swirling since two companies, No Lie MRI and Cephos Corp., began offering commercial testing using the technique in 2006 and 2008, respectively. While reservations abound about the reliability of these brain scans, whether courts accept them as evidence will initially be left to the discretion of individual judges, on a case-by-case basis.

In the past two decades, neuroscience research has made rapid gains in deciphering how the human brain works, building toward a fuller comprehension of behavior that could vastly change how society goes about educating children, conducting business, and treating diseases. Powerful neuroimaging techniques are for the first time able to reveal which parts of the living human brain are in action while the mind experiences fear, pain, empathy, and even feelings of religious belief.

"Anything that leads to a better, deeper understanding of people's minds plays right to the heart of human society and culture—and as a result, right to the heart of the law," says Greely. "The law cares about the mind."

The brain science revolution raises the tantalizing sci-fi–like prospect that secrets hidden inside people's heads, like prejudice, intention to commit a crime, or deception, may soon be knowable. And such "mind reading" could have wide-ranging legal ramifications.

Although the scientific know-how is not here yet, someday brain scans might provide stronger proof of an eyewitness's identification of a suspect, confirm a lack of bias in a potential juror, or demonstrate that a workers' compensation claimant does, in fact, suffer from debilitating pain. Neuroimaging evaluations of drug offenders might help predict the odds of relapse and guide sentencing. And new treatment options based on a better grasp of the neural processes underlying addictive or violent behavior could improve rehabilitation programs for repeat lawbreakers.

As director of the Stanford Center for Law and the Biosciences (CLB) and the Stanford Interdisciplinary Group on Neuroscience and Society, Greely has provided critical analysis of the societal consequences of genetic testing and embryonic stem cell techniques. In recent years, as he has turned his gaze to brain science, Stanford has emerged as a leader in the neurolaw field, with the CLB holding one-day conferences on "Reading Minds: Lie Detection, Neuroscience, Law, and Society" and "Neuroimaging, Pain, and the Law" in 2006 and 2008.

Greely, along with two Stanford neuroscience professors and two research fellows, has also been engaged in the Law and Neuroscience Project, a three-year, $10 million collaboration funded by the John D. and Catherine T. MacArthur Foundation since 2007. Headquartered at the University of California, Santa Barbara, with retired Supreme Court Justice Sandra Day O'Connor '52 (BA '50) as honorary chair, the project brings together legal scholars, judges, philosophers, and scientists from two dozen universities.

One of the project's networks is gauging neuroscience's promises and potential perils in the areas of criminal responsibility and the prediction and treatment of criminal behavior. A second network, co-directed by Greely, is exploring the impacts of neuroscience on legal decision making.

"A lot of the judges who are participating are just frankly baffled by this flood of neuroscience evidence they are seeing coming into the courts. They want to use it if it's good and solid, but they don't want to if it's flimflam," says Law and Neuroscience Project member William T. Newsome, professor of neurobiology at the Stanford School of Medicine. He and the other scientists are helping to educate their legal counterparts about what brain imaging "can tell you reliably and what it can't." The project will produce a neuroscience primer for legal practitioners.

Neuroscientific evidence has already influenced court outcomes in a number of instances. Brain scan data is gaining some traction in death penalty cases, after a defendant has been found guilty, says Robert Weisberg '79, the Edwin E. Huddleson, Jr. Professor of Law and faculty co-director of the Stanford Criminal Justice Center. That's because, during the penalty phase, the defendant has "a constitutional right to offer just about anything that could be characterized as mitigating evidence," he says.

"What happens here is that a lot of defense evidence that wouldn't be admissible during the guilt phase then comes back in a secondary way." For instance, Weisberg says, to try to reduce punishment to a life sentence, some defense lawyers are presenting neuroimaging pictures to argue that organic brain damage from an abusive childhood makes their client less culpable.

But the bar for admissibility of such evidence is different in different legal contexts, Weisberg adds, and it is generally set much higher in the guilt phase during which criminal responsibility is determined. In that setting, there's a greater reluctance to consider brain-based information. "Right now the courts are very, very worried about allowing big inferences to be drawn about how neuroscientific evidence explains criminal responsibility," he says.

Still, in two cases in California and New York, defendants accused of first-degree murder successfully argued for a lesser charge of manslaughter after presenting brain scans to establish diminished brain function from neurological disorders. And the first in the next generation of evidence from brain-based technology—fMRI lie detection—is already knocking at courtroom doors, posing "the most imminent risk issue" in neurolaw, says Greely.

On the Stanford campus, Greely has been working with neuroscientist Anthony D. Wagner (PhD '97), a Law and Neuroscience Project member, and Emily R. Murphy '12 and Teneille R. Brown—who have been Law and Neuroscience Project fellows—to further investigate the Indian BEOS profiling technology. The convicted woman and her current husband (also found guilty in the case) were granted bail by an appellate court while it considers the couple's appeal of the ruling.

BEOS's inventor claims that by analyzing brain wave patterns that indicate a remembrance of information about a murder, the test can distinguish the source of that knowledge: actually experiencing the crime versus hearing about it in the news. But there's no scientific evidence that such a feat is possible, says Wagner, a Stanford associate professor of psychology. Neuroimaging studies have shown that merely imagining events triggers patterns of brain activity similar to those that arise from experiencing the events for real.

Motivated by the case, Wagner, along with psychology postdoctoral fellow Jesse Rissman and Greely, is exploring whether basic memory recognition testing is possible with fMRI. Functional MRI looks for metabolic activity in the brain to see how different parts "light up" when an individual performs certain mental tasks while lying inside an MRI machine.

The idea of fMRI memory detection raises intriguing possibilities: Could it be used to verify whether a suspect's brain recognizes the objects in a crime scene shown in a photo, or to confirm an eyewitness's identification of a perpetrator—without the test subject even uttering a word? And, if so, how accurately? The answers aren't known.

In experiments funded by the Law and Neuroscience Project, the Stanford researchers are studying new computer algorithms for analyzing a person's neural activation patterns to see if they can be used to predict whether a face the person has seen before will be recognized. Preliminary accuracy rates look good. But, Wagner cautions, it is uncertain whether the lab findings would translate to real-world use.

And that is the seemingly insurmountable sticking point with fMRI lie detection. Unlike polygraph testing, which monitors for anxiety-induced changes in blood pressure, pulse and breathing rates, and sweating that accompany prevarication, fMRI scans aim to capture the brain directly in the act of deception. About 20 published peer-reviewed studies have found that certain brain areas become more active when a person lies than when telling the truth. These experiments usually involved healthy volunteers who were instructed to tell simple fibs (such as about which playing card they were viewing on a screen).

Based on such research, Cephos claims an accuracy rate of 78 percent to 97 percent in detecting all kinds of lies; No Lie MRI claims 93 percent or greater. Both companies say their tests are better than the polygraph, whose poor record of reliability has made it inadmissible in most courts. But they haven't convinced the broader neuroscience community that the fMRI method is ready for use in the real world, with its varied deceptions, from complicated half-truths to rehearsed false alibis. Experimental test conditions are a far cry from the highly emotional, stressful scenario of being accused of a crime for which you could be sent to prison. For now, Wagner says, it is premature to use fMRI lie detection technology in any legal proceeding.

Nonetheless, one of the first known attempts to admit such evidence into court was in a juvenile sex abuse case in San Diego County earlier this year. To try to prove he was innocent, the defendant submitted a scan from No Lie MRI but later withdrew it. (For details about the CLB's involvement, see its blog at lawandbiosciences.wordpress.com.) No Lie's CEO, Joel Huizenga, says that he is confident the brain scan tests can pass court admissibility rules "with flying colors" if the decision isn't politicized by opponents.

But George Fisher, the Judge John Crown Professor of Law and a former criminal prosecutor, thinks the justice system won't recognize such evidence anytime soon. Trial court criteria for admitting data from a new scientific technique set stiff requirements for demonstrating its reliability, he says. In federal courts and roughly half of state courts, individual judges must apply the Daubert standard on a case-by-case basis, hearing testimony from experts on key questions: Is the evidence sound? Has the scientific technique been tested and published in the peer-reviewed literature? What is its error rate? Other state courts use the Frye test of admissibility, which requires proof that scientific evidence is generally accepted in the relevant scientific community.

Fisher's guess is that fMRI lie detection evidence "will not get past the reliability stage in most places." Attempts to reproduce real-world lying in the lab, he says, "are probably unlikely to satisfy a court when it really gets down and looks hard at these studies."

Plus, the justice system has an ideological aversion to lie detection technology: In United States v. Scheffer, four Supreme Court justices said that a lie detection test, regardless of its accuracy, shouldn't be admitted into federal courts because it would infringe on the jury's role as the human "lie detector" in the courtroom. "The mythology around the system is that the jurors are able to tell a lie when they see one," says Fisher.

Greely is less sanguine that courts will keep unproven fMRI testing out. "Anybody can try to admit neuroscience evidence in any case, in any court in the country," he says, adding that busy judges are typically not well prepared to make good decisions about it.

If courts were ever inclined, however, to accept new lie detection evidence that isn't firmly rooted in science, it would most likely happen on the defense side rather than the prosecution's, speculates former U.S. Attorney Carol C. Lam '85, who is deputy general counsel at Qualcomm. That's because the criminal justice system is structured to give the defendant the benefit of any doubt.

Such admissions, if they ever happened, would also most likely occur first in proceedings where the judge is the only trier of fact, Lam adds; individual judges might be curious about the fMRI test and confident that they can determine the appropriate weight to give it. But Lam also notes that the defense community might not actually wish to present fMRI lie detection results in court, for fear that if this kind of evidence became widely accepted by the judicial system, prosecutors would begin to use it against criminal defendants.

The potential ethical and legal issues surrounding brain scans for deception or memory detection get thorny quickly. On one hand, everyone agrees that a highly accurate fMRI lie detection test could be a powerful weapon in exonerating the innocent, similar to forensic DNA evidence. But could prosecutors compel someone to undergo testing, or would that violate the Fifth Amendment's protection against self-incrimination? Would it violate the Fourth Amendment's bar against unreasonable searches? A broader question may be whether a right to privacy is violated if someone scans your brain to read your mind, neurolaw experts say—whether for court, the workplace, or school.

Even if fMRI lie detection's reliability remains in doubt, law enforcement and national security agents could still use it to guide criminal investigations, as they do with the polygraph. However, Steven Laken, president and CEO of Cephos, points out that no one can be interrogated by brain scan against his or her will, because the procedure currently requires significant cooperation from the subject, both in preparing for it and in undergoing it.

Greely has proposed that fMRI lie detection companies be required to get pre-marketing approval from an agency like the Food and Drug Administration. Not surprisingly, Laken and Huizenga are opposed to the idea. Huizenga says that, given the enormous time and expense this would take, the idea is really a politically motivated move to stop the technology cold. Laken, however, says he is open to a discussion with Law and Neuroscience Project researchers, other scientists, and government agencies about what it would take to validate the accuracy of the technology.

As scientists unlock the mysteries of the human brain, we may learn that some people are neurally wired in ways that compel them to certain types of unlawful behavior: Their brains made them do it. How much this should lessen their culpability or punishment is a weighty question that courts would have to grapple with.

Some philosophers and neurobiologists believe neuroscience will prove that human beings don't have free will; instead, we are creatures whose actions are determined by mechanical workings of the brain that occur even before we make a conscious decision. If that's true, these thinkers argue, it could finally explode the very concept of criminal responsibility and shatter the judicial system.

But most legal scholars don't buy into that. "I think the free will conversation is the most dead-ended in all of neuroscience," says Mark G. Kelman, the James C. Gaither Professor of Law and vice dean. The debate has been going on for 2,000 years, he says, and critics of the idea that free will exists concluded long ago that human behavior is governed by the mind, not by some imagined moral entity within it, and that the mind is located in the brain. It's doubtful, Kelman says, that neuroscience will add anything new to arguments about free will and criminal responsibility by detailing the precise locations or processes that explain particular actions or traits, like a lack of empathy or impulse control.

Furthermore, others point out that the criminal justice system does not rely on a premise of free will. "It depends on the hypothesis that people's behavior is shapeable by outside forces," says Fisher. "And there's a big difference between saying there is no free will and saying the risk of punishment has no impact on a person's calculations about what to do next."

What is far more probable, many experts say, is that one of neuroscience's biggest influences will be in revamping processes like sentencing or parole, or in forcing us to rethink ideas such as the rehabilitation of criminals, sexual predators, mentally insane convicts, or drug offenders. Although minimum sentences are mandated in many situations, judges still have some discretion in how they handle defendants in certain cases. If research led to better predictions of future behavior that could help distinguish the more dangerous lawbreakers from the safer bets, courts could make better decisions about how long a sentence to give a defendant, whether he should be given probation or sent to prison, and, once he's in jail, when he should come out.

"If we can better evaluate what the problem is and what the chances are of controlling the defendant's behavior in the future, we're going to be better off," says O'Connor. Answers from neuroscience would be extremely welcome in decision making when defendants are committed to a mental institution. "When should a person be confined or when is it appropriate to have a person released on medication?" she asks. "There's just a need for that kind of information."

Drug addiction is another area where the law is hungry for better solutions and more effective treatments. "Our jails are overloaded, and they are overloaded with people who have committed drug crimes," says O'Connor. "So it just becomes enormously important to figure out how people get addicted to drugs and what we can do to sever that connection if we can."

Predictions of recidivism might be improved through the invention of brain imaging tools that assess whether an addict has truly broken the habit, says Newsome. For example, one possible test could be to scan the person's brain while she views video clips of people injecting heroin. If research established that such tempting scenes reliably triggered greater activity in the emotional centers of drug abusers' brains, scans taken before and after treatment could provide "an objective basis for saying, 'This person's getting on top of their problem,'" Newsome says.

Parole boards have been moving toward taking greater account of evidence-based predictions of behavior, adds Weisberg. "It is possible that neuroscientific evidence could be used to weigh into influencing the conditions of parole or the kind of treatment program the prisoner is sent into," he says.

When it comes to rehabilitation, new treatments that seek to change criminal behavior raise their own potentially Orwellian ethical dilemmas, though. A vaccine against cocaine is in clinical trials, Greely says. If it ever reaches the market, would the legal system force coke addicts to get vaccinated—or otherwise imprison them? "Every plus here has an associated minus," he says. "You could imagine changing behaviors in good ways, or in bad ways." The thought of giving the government strong tools for altering people's behavior through direct action on the brain is, he says, "scary."

Prognosticators of neurolaw must walk a careful line in making conjectures about the future. A few years ago, a British bioethics scholar complained to Greely that the law professor's dissections of the legal implications of fMRI lie detection paid short shrift to whether the technology actually works—possibly leaving people with the impression that it will, or already does.

"That was a really good wake-up call," recalls Greely. Still, while no one knows exactly where the science will take us, he says, the goal of neurolaw is to clarify how coming discoveries might affect the legal world and to point out tensions, gaps, and areas where the law may need rethinking. Society must worry about both long-term implications of the hypothetical future and short-term realities of the present, he says. "You've got to look in both directions."